Background

Being a technical blogger, a data dork and an organized digital hoarder does have its advantages, although my wife would probably argue that point! She just tells her friends things like this:

Yes, he is a dork, and I do have to live with this all the time.

She will call me Mr Max Data Dork on the days I dress like this (Figure 1). I know that I deserve that! It’s worth it since I know that I have a supercomputer in my backpack coupled with two incredible software packages called Alteryx and Tableau. With that backpack, I feel like I can do anything with data!

Figure 1 – If you are reading this article, you must be a lover of data. I give you permission to admit your passion and to stop being ashamed of it! It is possible to be a data dork and still get an awesome wife!

This Is A Special Article For Me

When Tableau 10.5 was released, I knew that this special article had to be written. Why is it special? Well, it is my 300th article on 3danim8’s Blog, and I couldn’t think of a better way to celebrate completing another 100 blog articles than by going back to my Tableau roots.

I awoke this morning with this article formed in my head. I have no control over how this happens, but I do control whether I react to these stories by taking the time to do the work and write the stories. In this case, I’m going to do the work and write up the results.

I have chosen to write this one without having completed any of the work. Just like in my Tableau Vs Power BI series, I have no prior knowledge of the outcome as I write these words. The only work I have done so far is to find the old hard drive that held some data files I previously used to benchmark Tableau, as I will explain.

It Was 1,530 Days Ago When…

I published this Tableau benchmark article on Nov 4, 2013. For the record, I didn’t really expect anyone to read this one.

Back then, I believed that only the Tableau development team and true Tableau computational nerds would show interest in an article like this. I thought a few guys like Joe Mako, Jonathan Drummey, and Russell Christopher were possible readers when I hit the publish button. We didn’t have as many computational Tableau bloggers back then as there are now.

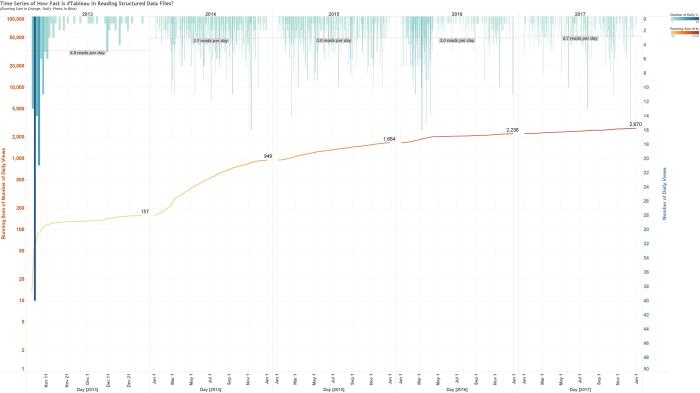

Much to my surprise, as shown in Figure 2, this is one of those articles that has stuck around over time. With 2,670 reads in the roughly 1,530 days since it was written, or close to 2 reads per day on average, this article continues to be interesting to people. This example conclusively proves to me that I have no idea what I’m doing writing a blog. It amazes me that so many articles like this one can have long-term readership.

Figure 2 – How Fast is #Tableau in Reading Structured Data Files? This was published on November 4, 2013.

In this article, I published a series of 15 benchmark examples using Tableau version 8.0.5. These benchmarks were intended to determine how fast Tableau could ingest csv files and turn them into Tableau data extracts (tdes). I also examined the compression ratios achieved for the different types of files.
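To make the two quantities in that benchmark concrete, here is a minimal Python sketch of how ingestion speed and compression ratio can be computed for a single csv-to-extract run. The function name `ingestion_metrics` and all of the input numbers are hypothetical illustrations, not the measurements or the method from the original 2013 article.

```python
def ingestion_metrics(csv_bytes: int, extract_bytes: int, rows: int, seconds: float):
    """Summarize one csv-to-extract benchmark run (hypothetical helper).

    Returns (rows per second, megabytes of csv per second, compression ratio).
    The compression ratio is csv size divided by extract size, so 5.0 means
    the extract is one fifth the size of the source file.
    """
    if seconds <= 0 or extract_bytes <= 0:
        raise ValueError("seconds and extract_bytes must be positive")
    rows_per_sec = rows / seconds
    mb_per_sec = csv_bytes / 1_000_000 / seconds  # decimal megabytes
    compression_ratio = csv_bytes / extract_bytes
    return rows_per_sec, mb_per_sec, compression_ratio


# Hypothetical run: a 500 MB csv with 5 million rows that becomes a
# 100 MB extract in 50 seconds (made-up numbers, not measured results).
print(ingestion_metrics(500_000_000, 100_000_000, 5_000_000, 50.0))
# -> (100000.0, 10.0, 5.0)
```

Reporting both rows per second and megabytes per second is a design choice: files with many narrow columns and files with a few wide text columns can look very different on one measure and similar on the other.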

In a strange twist of fate, my place of employment has an ongoing program named “Hyper Ingestion” of data, in which large volumes of data are being added to a big data system. Sometimes this program can give us “Hyper Indigestion”: we can’t get to the data we need when we need it, because it hasn’t been “consumed” yet.

For this study, I’m going to determine whether the new Tableau “Hyper” data engine will give me “High Performance” or “Hyper Indigestion”. Let the games begin.

Benchmark II

In this test, I am going to reproduce the results using my original fifteen test cases. I might even go deep and hit Tableau with some of my big files. My goal is to quantify how much improvement occurs when using Hyper. I want to know how much faster Tableau can create a Hyper extract compared to creating a “tde”. Once that is done, I might even compare the performance of the two extract types head-to-head when they are used.
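One way to express “how much faster” is as a speedup factor alongside the percentage of time saved. The Python sketch below shows that arithmetic; the function name `compare_extract_times` and the timings are hypothetical placeholders, not results from this study.

```python
def compare_extract_times(tde_seconds: float, hyper_seconds: float):
    """Compare extract-creation times for the same input file (hypothetical helper).

    Returns (speedup factor, percent of time saved by Hyper).
    A speedup of 4.0 means Hyper finished in a quarter of the TDE time.
    """
    if tde_seconds <= 0 or hyper_seconds <= 0:
        raise ValueError("times must be positive")
    speedup = tde_seconds / hyper_seconds
    percent_saved = 100.0 * (tde_seconds - hyper_seconds) / tde_seconds
    return speedup, percent_saved


# Hypothetical timings only, not benchmark results.
print(compare_extract_times(120.0, 30.0))
# -> (4.0, 75.0)
```

Keeping both numbers avoids a common mix-up: a 4x speedup means 75% less time, not a 400% reduction.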

Why would I want to do this? I do it because I love this stuff. For me, things like this take me back to the beginnings of my studies of numerical calculus, numerical analysis, computational efficiency, etc. The years I worked writing computational algorithms for solving partial differential equations gave me some insight into what it takes to drive technology forward. Tableau is great at bringing us new technologies that make us better at our jobs.

I believe in Tableau. I believe in the development team. Even though I haven’t done any work on this, I am confident that the results will be impressive when article number 301 gets written sometime soon. If the outcome isn’t what I expect it to be, I’ll be shocked.

I’m excited to do the work, so if you are interested, consider subscribing to this blog to follow my next 100 articles. As shown in Figure 3, you never know what is going to get cooked up in the mind of this data dork!

Figure 3 – A few people that I really respect have gently told me that this blog is a bit too technical and complicated. Well, all I have to say is this: Tableau is one of two of my favourite data engines. If you are going to have sports cars, you better be willing to drive them. If not, what is the point of having them? Finally, where did I find those Pi estimators? I found them in the Excel worksheet from Nov 4, 2013, which contained the original benchmark results. Clearly, I was thinking about hitting Tableau with something more than just csv files, even back then.

Final Thoughts

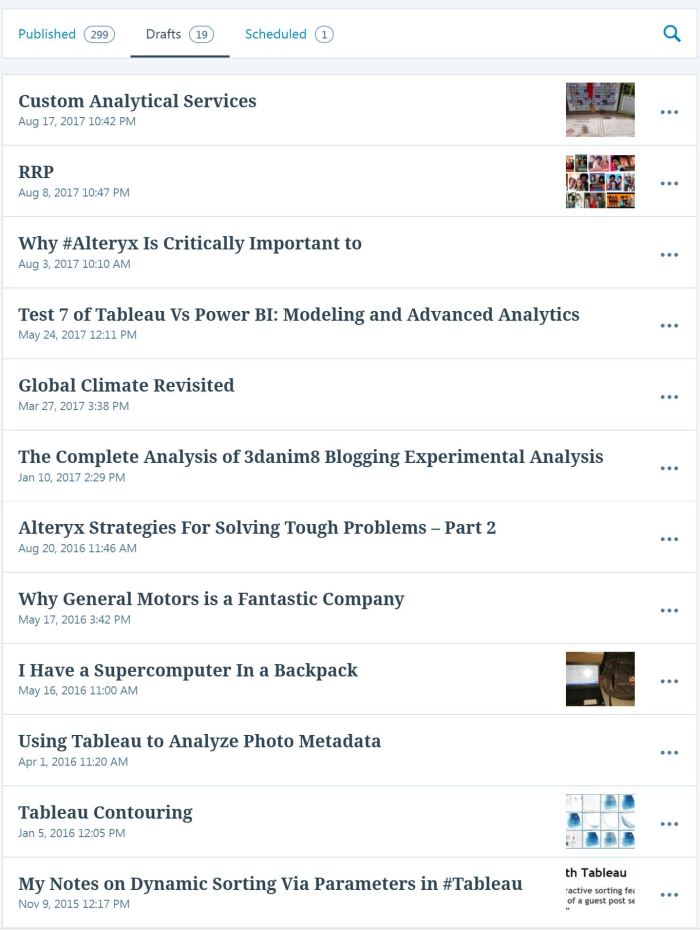

Writing a blog like this doesn’t always go according to plan. Figure 4 shows that there are almost 20 draft articles that I have started but not yet finished. Motivations change, life gets in the way, and work takes precedence over blogging. All of these things collide on a daily basis to slow me down from achieving my vision and satisfying my curiosity.

The only piece of advice I can give to people interested in doing this type of “free” work is to keep chugging along. After enough time has passed, you can look back and see where you have been. You will see your own progress, even though not many people will look at your work. There is satisfaction in completing each article and sharing your knowledge.

Figure 4 – 299 articles down, 1 scheduled and 19 incomplete. Life isn’t perfect but just do the best you can!

Benchmark 2 Is Now Published

It took me a couple of weeks to finish, but now I have published the results of the benchmark study. Click here to read it.

Eagerly awaiting #301

What a cliff-hanger! I was expecting to see some hint of the results, but I will have to wait for the next post. I was fortunate to attend my first Tableau conference last October, and saw the side-by-side live demo of Hyper and TDE in the data arena. That was pretty impressive.

In addition to benchmarking Tableau against itself, are you considering benchmarking 10.5 against other solutions, such as Power BI?

Hi Gary,

Yes, a cliff-hanger! I don’t think I’ve ever succeeded in writing one of those.

Well Gary, you have given me some inspiration! I might just have to expand the scope of this work to include a few other items. For now, I’ll leave that vague, but trust me when I tell you that I’ve got a few things cooking on this one.

Ken

Ken

As I could not find any detailed technical article about Hyper: do you know whether dimensional modelling will eventually be available in Tableau? I come from a Power BI/Power Pivot background, and my current employer uses Tableau. I find it very frustrating that Tableau does not have a “proper” data model like Power BI or Qlik.

Joining multiple data sources with different granularity is more of a hack, and blending is very limited. I am sure I am missing something, as a lot of users seem happy with Tableau.

Hi Mim,

I suspect that there are a lot of people that share the feelings you have about the lack of a data model in Tableau.

When I matured to a point in my career where I needed advanced data joining operations, I was learning to do it in Alteryx. When Microsoft tried and failed to teach me Power BI, I felt frustrated by that type of data model. I think that we feel most comfortable in the tools we learn to use first.

Historically, Tableau has not really been designed to be a fully operational data tool like Alteryx or other data manipulation packages. The sophistication of what can be done to data in Tableau is rapidly escalating with the emergence of Project Maestro. I think that Tableau is addressing a primary deficiency of its tool.

Considering the excellence of what they have already accomplished, I suspect that they will produce a first-class data manipulation and preparation package of great capability.

Ken

A total tease, Ken! Congrats on 300 articles! Please keep up the writing and don’t change your style. I can’t wait to see the benchmark results and whether Hyper lives up to the hype.

Hi Jason,

If I can figure out a way to eliminate the real work I have to do every day, I’d be able to complete this testing. I can’t wait to finish it. I hope to do it this weekend!

Ken